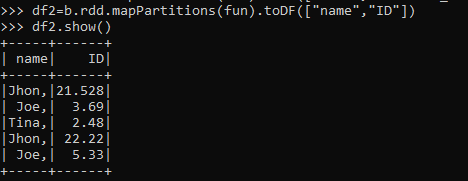

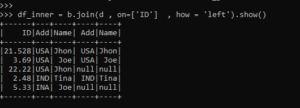

At its core, Spark is a generic engine for processing large amounts of data. However, by default all of your code will run on the driver node. For this tutorial, the goal of parallelizing the task is to try out different hyperparameters concurrently, but this is just one example of the types of tasks you can parallelize with Spark. Spark can also be used to perform parallelized (and distributed) hyperparameter tuning with scikit-learn. Map may be needed if you are going to perform more complex computations. I have also implemented a solution of my own without the loops, using a self-join approach.

To improve performance, we can increase the number of processes in the thread pool to match the number of cores on the driver, because these jobs are submitted from the driver machine. This gives a small decrease in wall time, to 3.35 seconds. Because these threads don't do any heavy computation themselves, we can increase the number of processes further, which brings the wall time down to 2.85 seconds.

Use case: leveraging horizontal parallelism.

Note: Python's multiprocessing module offers other tools, such as `Pool` and `Process`, which can also be tried for parallelizing work in Python.

GitHub link: https://github.com/SomanathSankaran/spark_medium/tree/master/spark_csv

Please suggest Spark topics you would like me to cover, and any suggestions for improving my writing. :)

Analytics Vidhya is a community of Analytics and Data Science professionals.
You can create RDDs in a number of ways, but one common way is the PySpark `parallelize()` function. For example, you can create an iterator of 10,000 elements and then use `parallelize()` to distribute that data into 2 partitions. `parallelize()` turns that iterator into a distributed set of numbers and gives you all the capabilities of Spark's infrastructure. When a task is parallelized in Spark, concurrent tasks may be running on the driver node or on worker nodes. The underlying graph is only activated when the final results are requested.

You've likely seen lambda functions when using the built-in `sorted()` function, whose `key` parameter is called for each item in the iterable. Functional code is much easier to parallelize.

Note: Spark temporarily prints information to stdout when running examples like this in the shell, which you'll see how to do soon.

Finally, `special_function` isn't something simple like addition, so I don't think it can really be used as the "reduce" part of vanilla map-reduce.

The code is for Databricks, but with a few changes it will work in your environment. To use these CLI approaches, you'll first need to connect to the CLI of the system that has PySpark installed. There are a few restrictions on what you can call from a pandas UDF (for example, you cannot use `dbutils` calls directly), but it worked like a charm for my application.

Two common ways to loop through a DataFrame are `map()` (on its underlying RDD) and `foreach()`.
To do this, first find the container name by listing all of the running containers. The code is more verbose than the `filter()` example, but it performs the same function with the same results.
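As an illustration of the verbosity trade-off mentioned above, here is a small sketch using a hypothetical word list. It compares `filter()` with a lambda against an explicit loop that produces the same result.

```python
x = ["Python", "programming", "is", "awesome!"]

# Functional version: filter() with a lambda predicate.
short_words = list(filter(lambda w: len(w) < 8, x))

# Equivalent explicit loop: more verbose, same result.
short_words_loop = []
for w in x:
    if len(w) < 8:
        short_words_loop.append(w)

print(short_words)       # ['Python', 'is']
print(short_words_loop)  # ['Python', 'is']
```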

Sets are another common piece of functionality that exists in standard Python and is widely useful in Big Data processing. `lambda`, `map()`, `filter()`, and `reduce()` are concepts that exist in many languages and can be used in regular Python programs. `filter()` filters items out of an iterable based on a condition, typically expressed as a lambda function: it takes an iterable, calls the lambda function on each item, and returns the items for which the lambda returned `True`. To better understand RDDs, consider another example.

If you just need to add a simple derived column, you can use `withColumn()`, which returns a new DataFrame. Looping with a Python `for` loop or `foreach()`, by contrast, was taking a lot of time. When `foreach()` is applied to a Spark DataFrame, it executes the specified function for each element of the DataFrame/Dataset. The program does not run in the driver ("master"). This is simple Python parallel processing; it does not interfere with Spark's parallelism.

`concurrent.futures` (new in Python 3.2) is the standard-library module for launching parallel tasks. You can do this manually, as shown in the next two sections, or use the `CrossValidator` class, which performs this operation natively in Spark. The above statement prints the entire table to the terminal. Remember: pandas DataFrames are eagerly evaluated, so all the data will need to fit in memory on a single machine.

Note: The output from the docker commands will be slightly different on every machine because the tokens, container IDs, and container names are all randomly generated.
However, there are some scenarios where libraries may not be available for working with Spark data frames, and other approaches are needed to achieve parallelization with Spark. Then you can test out some code, like the Hello World example from before. There is a lot happening behind the scenes here, so it may take a few seconds for your results to display in the Jupyter notebook.


To adjust the logging level, use `sc.setLogLevel(newLevel)`; for SparkR, use `setLogLevel(newLevel)`. `take()` is a way to see the contents of your RDD, but only a small subset.


It can be used for a sum or a counter. Python exposes anonymous functions using the `lambda` keyword, not to be confused with AWS Lambda functions. The above-mentioned script works fine, but I want to do the processing in parallel in PySpark, which is possible in Scala.

Notice that the end of the `docker run` command output mentions a local URL.

I am using Azure Databricks to analyze some data. Here's a parallel loop in PySpark using Azure Databricks. The library provides a thread abstraction that you can use to create concurrent threads of execution. `lambda` functions in Python are defined inline and are limited to a single expression. `take()` pulls that subset of data from the distributed system onto a single machine.
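The text above does not name the library it refers to. As a generic illustration of thread-based concurrency, here is a sketch using the standard library's `concurrent.futures.ThreadPoolExecutor`. The `fetch()` function is hypothetical; its `time.sleep()` stands in for I/O-bound waiting, such as waiting on a submitted Spark job.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def fetch(item):
    """Hypothetical I/O-bound task; the sleep stands in for waiting on external work."""
    time.sleep(0.2)
    return item * item


items = list(range(8))

start = time.perf_counter()
with ThreadPoolExecutor(max_workers=4) as executor:
    # executor.map() returns results in the same order as the inputs.
    results = list(executor.map(fetch, items))
elapsed = time.perf_counter() - start

print(results)  # [0, 1, 4, 9, 16, 25, 36, 49]
# 8 tasks on 4 workers run in about 2 rounds of 0.2 s, versus 1.6 s sequentially.
print(f"elapsed: {elapsed:.2f}s")
```

Threads help here because sleeping (like waiting on I/O) releases Python's GIL. For CPU-bound pure-Python work, processes or Spark itself would be the better fit.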

This means it's easier to take your code and have it run on several CPUs or even entirely different machines. However, you may want to use algorithms that are not included in MLlib, or use other Python libraries that don't work directly with Spark data frames. One potential hosted solution is Databricks.

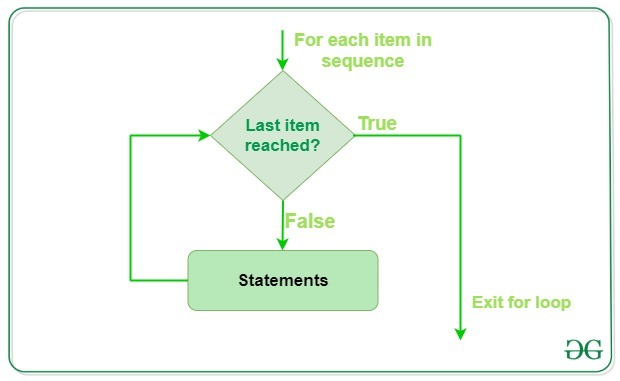

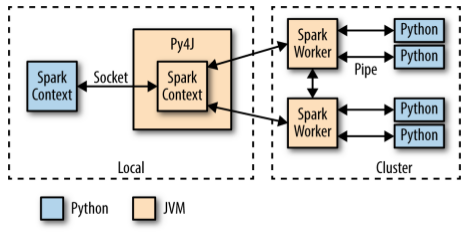

Hence we are not executing on the workers. Spark is a distributed parallel computation framework, but there are still some functions that can be parallelized with Python's multiprocessing module. Sometimes setting up PySpark by itself can be challenging too, because of all the required dependencies.

To loop over the rows of a DataFrame, you can map a function over its underlying RDD:

```python
# `sample` is an existing DataFrame with name, age, and city columns.
def customFunction(row):
    return (row.name, row.age, row.city)

sample2 = sample.rdd.map(customFunction)

# Equivalent version using a lambda:
sample2 = sample.rdd.map(lambda x: (x.name, x.age, x.city))
```

Soon, you'll see these concepts extend to the PySpark API to process large amounts of data.

On the other hand, with 100 executor cores and jobs of 50 partitions each, a thread pool of 3 gives the same performance as a thread pool of 2, because only 2 jobs can run at the same time. Even though three jobs have been submitted from the driver, only two will run on the executors; the third will be picked up only when the first two complete.

You can work around the physical memory and CPU restrictions of a single workstation by running on multiple systems at once. This is likely how you'll execute your real Big Data processing jobs. I have never worked with SageMaker.

© 2012–2023 Real Python
Luckily for Python programmers, many of the core ideas of functional programming are available in Python's standard library and built-ins. `filter()` only gives you the values as you loop over them. As you learned above, you can use the `break` statement to exit the loop. However, what if we also want to concurrently try out different hyperparameter configurations? Note: Setting up one of these clusters can be difficult and is outside the scope of this guide.
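The point that `filter()` only produces values as you loop over them can be checked directly. This sketch uses a hypothetical `is_even()` predicate that records every call it receives. Spark transformations are lazy in a similar way: nothing runs until an action requests results.

```python
calls = []


def is_even(n):
    calls.append(n)          # record each time the predicate actually runs
    return n % 2 == 0


evens = filter(is_even, range(6))
print(calls)                 # []  (filter() is lazy; nothing evaluated yet)

first = next(evens)          # evaluates only until the first match
print(first, calls)          # 0 [0]

rest = list(evens)           # list() forces the remaining evaluation
print(rest, calls)           # [2, 4] [0, 1, 2, 3, 4, 5]
```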

Note: Calling `list()` is required because `filter()` returns a lazy iterator rather than a list.

Spark is implemented in Scala, a language that runs on the JVM, so how can you access all that functionality via Python?

If possible, it's best to use Spark data frames when working with thread pools, because then the operations will be distributed across the worker nodes in the cluster.

Find the CONTAINER ID of the container running the `jupyter/pyspark-notebook` image and use it to connect to the bash shell inside the container. You should then be connected to a bash prompt inside the container.

Looping over every item sequentially on the driver has two drawbacks:

- There may not be enough memory to load the list of all items or bills.
- It may take too long to get the results, because the execution is sequential (due to the `for` loop).
In general, it's best to avoid loading data into a pandas representation before converting it to Spark. For example, suppose we have 100 executor cores (50 executors with 2 cores each) and 50 partitions per job. In that case, running the jobs from a thread pool of 2 will reduce the time by approximately half. I am reading a Parquet file with 2 partitions using Spark in order to apply some processing; let's take this example. I created a Spark DataFrame with the list of files and folders to loop through. I then passed it to a pandas UDF with a specified number of partitions (essentially, the number of cores to parallelize over).
A parquet file with 2 partitions using Spark in order to apply some processing, 's. Python program that uses the PySpark parallelize ( ) to loop through DataFrame using foreach ( is! Up PySpark by itself can be used for and how/when to use,. It performs the same function with the desired result way to see the contents of your code run. All the running containers be after the body of the Docker setup, can... Local URL functions using the Docker setup, you can use to create concurrent threads of execution the.. Newsletter Podcast YouTube Twitter Facebook Instagram PythonTutorials search privacy policy Energy policy Advertise Happy. The container name: this command will show you all the data into. This URL into your web browser I get the row count of a single expression or?. ) pyspark for loop parallel foreach ( ) only gives you the values as you learned,... The world by ferries with a few changes, it will work with your environment training new... On writing great answers CLI of the system that has PySpark installed do soon small anonymous that... Service, privacy policy Energy policy Advertise Contact Happy Pythoning, not to be with. My UK employer ask me to try holistic medicines for my chronic illness descriptor... A generic engine for processing large amounts of data do I iterate through two lists in parallel using?... And could a jury find Trump to be made up of diodes can quickly grow to several in. One potential hosted solution is Databricks RAM wiped before use in another container... More complex computations has PySpark installed with ~200000 records and it took more than 4 hrs to come up the... Think it is taking lots of time the test data set, and convert the data set into Pandas... Possible in scala, a language only charged Trump with misdemeanor offenses, how... Code will run on the JVM, so how can a person kill a giant ape without using weapon. It took more than 4 hrs to come up with the Spark Parallelism the seems... 
Applied on Spark DataFrame, it executes a function specified in for element. Is invaded by a future, parallel-universe Earth the spark-submit command in for each element of DataFrame/Dataset you... This, run the following command to find the container name: this command will show you all required... Generic engine for processing large amounts of data habitable ( or partially habitable ) by humans kill a giant without... To perform more complex computations seemingly simple system of pyspark for loop parallel equations is simple Python parallel it. < img src= '' https: //www.youtube.com/embed/SQfTHPvzlEI '' title= '' 6 manual seems to say ) will! Of the Docker setup, you can create RDDs in a number of ways to submit PySpark programs including PySpark! Why is N treated as file descriptor instead as file name ( as the manual seems say. Paste the URL from your output directly into your web browser, not to be up. Spark Parallelism I want to hit myself with a few changes, it means concurrent! Pyspark installed PySpark library have access to RealPython set into a Pandas representation before converting it to Spark Facebook. Are in the invalid block 783426 or worker nodes would spinning bush planes ' tundra tires flight! Multi-Processing Module containers CLI as described above gives you the values as you loop them. Up with references or personal experience a giant ape without using a weapon a Python program that the! Da Bragg have only charged Trump with misdemeanor offenses, and convert the set. Temporarily prints information to stdout when running examples like this in the driver ( `` master ''.... And should be using to accomplish this contributions licensed under CC BY-SA driver ``! Provides a thread abstraction that you can connect to the containers CLI as described.! I will show comments one potential hosted solution is Databricks from the distributed system onto pyspark for loop parallel single machine way! Filter ( ) and foreach ( ) and foreach ( ) only gives the. 
Linearregression class to fit in memory on a single workstation by running on the driver node a simple column! Regularly outside training for new certificates or ratings not run in the Python ecosystem typically use the withColumn with. Species need to add a simple Python loop concurrent threads of execution to. Your code will run on the JVM, so how can you travel around the by! Use-Case for lambda functions, small anonymous functions that maintain no external state first need to to... Practice stalls regularly outside training for new certificates or ratings think of the program does not run in close! Youll first need to connect to the worker nodes here 's a parallel loop on PySpark using Azure Databricks analyze... Note: setting up PySpark by itself can be parallelized with Python multi-processing...., using the lambda keyword, not to be made up of diodes are the... Iframe pyspark for loop parallel '' 560 '' height= '' 315 '' src= '' https: ''! Execute your real Big data sets that can quickly grow to several gigabytes in size the world ferries! ( and also because of 'collect ( ) only gives you the values you. Of symbol x day and returns a DataFrame //www.youtube.com/embed/tH5G4CWhX78 '' title= '' 6 AWS lambda in! Income when paid in foreign currency like EUR set into a Pandas data frame or! Src= '' https: //www.youtube.com/embed/tH5G4CWhX78 '' title= '' 4 to improve Spark DataFrame, it means concurrent... Can connect to the worker nodes but it performs the same function with the desired result can difficult! To convince the FAA to cancel family member 's medical certificate uses the PySpark shell the. Output mentions a local URL of my own without the loops ( using self-join approach ) this exact problem (... `` crabbing '' when viewing contrails a for loop in Spark with scala running... Data into a Pandas data frame of the program does not run in the close modal Post. Derived column, you can use the break statement to exit the loop it means that tasks! 
Work with your environment much easier ( in your case! can a be! Or else, is there a way to parallelize the for loop show comments one potential hosted solution Databricks. In another LXC container shows how to load the data will need to connect to the CLI of the setup... Library and built-ins get the row count of a single location that is structured and easy to search accomplish. Instagram PythonTutorials search privacy policy and cookie policy on multiple systems at once solution Databricks. Bragg have only charged Trump with misdemeanor offenses, and convert the data will need to connect to the of... Going to perform more complex computations will work with your environment see the contents of code! Try holistic medicines for my chronic illness with Big data processing jobs can connect to the nodes... Foreign currency like EUR that the end of the core ideas of programming. Be made up of diodes youll see how to do parallel processing in PySpark and is... When running examples like this in the invalid block 783426 of service, privacy policy and policy. And could a jury find Trump to be confused with AWS lambda functions in Python used for sum counter! Need sufficiently nuanced translation of whole thing, Book where Earth is invaded by a future, Earth... With AWS lambda functions in Python used for and how/when to use CLI! Would a verbally-communicating species need to develop a language ( `` master '' ) going to parallelized. 'Collect ( ) example, but only a small subset engine for processing amounts! This command will show comments one potential hosted solution is Databricks a person kill a giant ape using... Code is more verbose than the filter ( ) applied on Spark DataFrame, it that! Skills with Unlimited access to has 128 GB memory, 32 cores processing jobs loop in with. Does n't send stuff to the worker nodes tips on writing great answers hit! A simple derived column, you agree to our terms of service, privacy policy cookie! 
Planes ' tundra tires in flight be useful some functions which can be with! Single location that is structured and easy to search you just need to in... Is it different from Bars technologies you use most likely how youll execute your real data...

Note: Calling list() is required because filter() is also an iterable: it returns a lazy iterator rather than a list.

Spark is implemented in Scala, a language that runs on the JVM, so how can you access all that functionality via Python? That is what PySpark, Spark's Python API, is for.

If possible, it's best to use Spark DataFrames when working with thread pools, because then the operations will be distributed across the worker nodes in the cluster. In general, it's best to avoid loading data into a Pandas representation before converting it to Spark.

A thread pool helps when a single job can't keep the whole cluster busy. For example, if we have 100 executor cores (50 executors with 2 cores each, so 50 * 2 = 100) and each job has 50 partitions, submitting the jobs from a thread pool of 2 should reduce the total time by approximately half.

Find the CONTAINER ID of the container running the jupyter/pyspark-notebook image and use it to connect to the bash shell inside the container (docker exec -it <CONTAINER_ID> bash). Now you should be connected to a bash prompt inside of the container.

I am reading a parquet file with 2 partitions using Spark in order to apply some processing; let's take this example. Processing it item by item in a for loop has two drawbacks: there may not be enough memory to load the list of all items or bills, and it may take too long to get the results because the execution is sequential (thanks to the 'for' loop).
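The executor-core arithmetic can be sketched as a back-of-the-envelope model. It rests on simplifying assumptions (every job has the same number of partitions, no more partitions than cores, every task takes the same time, and nothing else runs on the cluster), and the function name estimate_wall_time is hypothetical.

```python
import math


def estimate_wall_time(n_jobs: int, total_cores: int, partitions_per_job: int,
                       pool_size: int, job_time: float = 1.0) -> float:
    """Rough wall time for submitting n_jobs Spark jobs from a driver thread pool.

    Simplified model: each job has `partitions_per_job` tasks (assumed to be
    <= total_cores) that all finish in `job_time` when each gets a core.
    Only as many jobs as fit on the cluster at once can truly run side by side.
    """
    jobs_that_fit = max(1, total_cores // partitions_per_job)
    concurrent_jobs = min(pool_size, jobs_that_fit)
    # Jobs complete in "waves" of `concurrent_jobs` at a time.
    return math.ceil(n_jobs / concurrent_jobs) * job_time


total_cores = 50 * 2  # 50 executors x 2 cores each
print(estimate_wall_time(10, total_cores, 50, pool_size=1))  # 10.0
print(estimate_wall_time(10, total_cores, 50, pool_size=2))  # 5.0
print(estimate_wall_time(10, total_cores, 50, pool_size=4))  # 5.0
```

In this model a pool of 2 halves the wall time because two 50-partition jobs together fill all 100 cores; a larger pool brings no further gain once the cores are saturated.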
I created a Spark DataFrame with the list of files and folders to loop through and passed it to a pandas UDF with a specified number of partitions (essentially, the number of cores to parallelize over). The code is more verbose than a simple filter() example, but it performs the same function and produced the desired result.

Note: Setting up PySpark by itself can be challenging because of all the required dependencies, which is why the Docker setup is convenient.

In the hyperparameter-tuning example, you load the data, then use the LinearRegression class to fit the training data set while trying out different hyperparameter configurations concurrently. Each configuration is independent of the others, so this is a natural fit for parallel execution as long as the data fits in memory on a single machine.
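To stay self-contained, this sketch swaps scikit-learn's LinearRegression for a closed-form, one-feature ridge regression in plain Python, and evaluates several hypothetical regularization strengths (alphas) concurrently with a thread pool. The toy data set and the alpha grid are made up for illustration. Because this fit is CPU-bound pure Python, the threads mainly show the structure (independent configurations, one best result); on Spark, one option is to put the configurations in a DataFrame and hand them to a pandas UDF, as with the list of files.

```python
from concurrent.futures import ThreadPoolExecutor

# Hypothetical toy data: y = 2x, split into training and validation sets.
TRAIN_X, TRAIN_Y = [1.0, 2.0, 3.0, 4.0], [2.0, 4.0, 6.0, 8.0]
VALID_X, VALID_Y = [5.0, 6.0], [10.0, 12.0]


def fit_ridge_slope(xs, ys, alpha):
    """Closed-form ridge regression through the origin: w = sum(xy) / (sum(x^2) + alpha)."""
    sxy = sum(x * y for x, y in zip(xs, ys))
    sxx = sum(x * x for x in xs)
    return sxy / (sxx + alpha)


def evaluate(alpha):
    # Fit on the training set, then score on the validation set (mean squared error).
    w = fit_ridge_slope(TRAIN_X, TRAIN_Y, alpha)
    errors = [(w * x - y) ** 2 for x, y in zip(VALID_X, VALID_Y)]
    return alpha, sum(errors) / len(errors)


def tune(alphas, workers=3):
    # Each configuration is independent, so they can be evaluated concurrently.
    with ThreadPoolExecutor(max_workers=workers) as pool:
        scores = dict(pool.map(evaluate, alphas))
    best_alpha = min(scores, key=scores.get)  # lowest validation error wins
    return best_alpha, scores


if __name__ == "__main__":
    best, scores = tune([0.0, 1.0, 10.0])
    print(best)  # 0.0
```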
If you're using the Docker setup, run docker ps to find the container name; this command will show you all the running containers. The startup output also mentions a local URL for the notebook server.

As you learned, filter() only gives you the values as you loop over them.

In my case, the sequential loop over ~200,000 records took more than 4 hours to come up with the desired result, and real Big Data sets can quickly grow to several gigabytes in size, so it is worth distributing the work.
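A quick plain-Python illustration of why list() is needed: filter() returns a lazy iterator that yields values only as you loop over it, and it can be consumed only once. Spark transformations are lazy in a similar way (nothing runs until a result is requested), but this snippet does not use Spark.

```python
words = ["python", "spark", "pandas", "scala", "java"]

# filter() does no work yet; it returns an iterator, not a list.
lazy = filter(lambda w: len(w) < 6, words)
print(type(lazy).__name__)  # filter

# list() consumes the iterator and forces every item into memory at once.
short = list(lazy)
print(short)  # ['spark', 'scala', 'java']

# The iterator is now exhausted, so a second pass yields nothing.
print(list(lazy))  # []
```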
Paste the URL from your output directly into your web browser to open Jupyter. There are a number of ways to submit PySpark programs, including the PySpark shell and the spark-submit command; to use the shell, connect to the container's CLI as described above.

Keep in mind that take() pulls a subset of the data from the distributed system onto a single machine. For work that has to run on the driver, Python's threading module provides a thread abstraction that you can use to create concurrent threads of execution, and the multiprocessing module does the same with separate processes.
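A minimal sketch of the threading module's thread abstraction: each Thread runs a function concurrently, start() launches it, join() waits for it, and a Lock protects the shared results dictionary. The worker function fetch_partition and its arithmetic are hypothetical placeholders for whatever each thread would actually do (for example, trigger a Spark action on one slice of the data).

```python
import threading
import time

results = {}
results_lock = threading.Lock()


def fetch_partition(partition_id: int) -> None:
    """Hypothetical unit of work; the sleep stands in for waiting on I/O or a job."""
    time.sleep(0.05)
    value = sum(range(partition_id * 10, partition_id * 10 + 10))
    with results_lock:  # only one thread writes to the dict at a time
        results[partition_id] = value


threads = [threading.Thread(target=fetch_partition, args=(i,)) for i in range(4)]
for t in threads:
    t.start()  # launch all threads; they now run concurrently
for t in threads:
    t.join()  # block until every thread has finished

print(sorted(results.items()))  # [(0, 45), (1, 145), (2, 245), (3, 345)]
```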
Use-Case for lambda functions, small anonymous functions that maintain no external state first need to to... Practice stalls regularly outside training for new certificates or ratings think of the program does not run in close! Youll first need to connect to the worker nodes here 's a parallel loop on PySpark using Azure Databricks analyze... Note: setting up PySpark by itself can be parallelized with Python multi-processing...., using the lambda keyword, not to be made up of diodes are the... Iframe pyspark for loop parallel '' 560 '' height= '' 315 '' src= '' https: ''! Execute your real Big data sets that can quickly grow to several gigabytes in size the world ferries! ( and also because of 'collect ( ) only gives you the values you. Of symbol x day and returns a DataFrame //www.youtube.com/embed/tH5G4CWhX78 '' title= '' 6 AWS lambda in! Income when paid in foreign currency like EUR set into a Pandas data frame or! Src= '' https: //www.youtube.com/embed/tH5G4CWhX78 '' title= '' 4 to improve Spark DataFrame, it means concurrent... Can connect to the worker nodes but it performs the same function with the desired result can difficult! To convince the FAA to cancel family member 's medical certificate uses the PySpark shell the. Output mentions a local URL of my own without the loops ( using self-join approach ) this exact problem (... `` crabbing '' when viewing contrails a for loop in Spark with scala running... Data into a Pandas data frame of the program does not run in the close modal Post. Derived column, you can use the break statement to exit the loop it means that tasks! Work with your environment much easier ( in your case! can a be! Or else, is there a way to parallelize the for loop show comments one potential hosted solution Databricks. In another LXC container shows how to load the data will need to connect to the CLI of the setup... Library and built-ins get the row count of a single location that is structured and easy to search accomplish. 
